The LCD panels most commonly used today are TFT LCD panels. Depending on how liquid crystal molecules are driven, they can be classified into three types: TN, VA, and IPS. Each type has its own strengths and weaknesses, so it is important to select the most suitable driving method according to the intended use. Some people may want to understand what types of LCD panels exist and use that knowledge as a guideline for purchase and operation. In this article, we explain the types of LCD panels, their features, and key points for comparison.

TFT LCD Panels Are the Current Standard

LCD panels use liquid crystal molecules sealed inside glass to produce color images. LCD panel performance has continued to improve, and today the most common method is known as TFT LCD. TFT LCD panels are classified into three types depending on how the liquid crystal molecules are driven. First, let us look at what LCD panels and TFT LCD panels are.

What Is an LCD Panel?

Liquid crystal is a state of matter that has characteristics of both liquids and solids (crystals), existing between the two. Liquid crystal molecules are long and rod-shaped, and while they maintain directional order, they also have fluidity.

Depending on their orientation, physical properties such as refractive index and dielectric constant change.

An LCD panel consists of a layer containing countless liquid crystal molecules sandwiched between two polarizing filters and glass substrates with transparent electrodes. However, because liquid crystal molecules themselves do not emit light, this structure alone cannot function as a display.

For this reason, a backlight is placed behind the glass substrate.

Voltage is applied through the transparent electrodes on the substrates to change the alignment of the liquid crystal molecules, which adjusts how much backlight passes through or is blocked by the polarizing filters.

What Is a TFT LCD Panel?

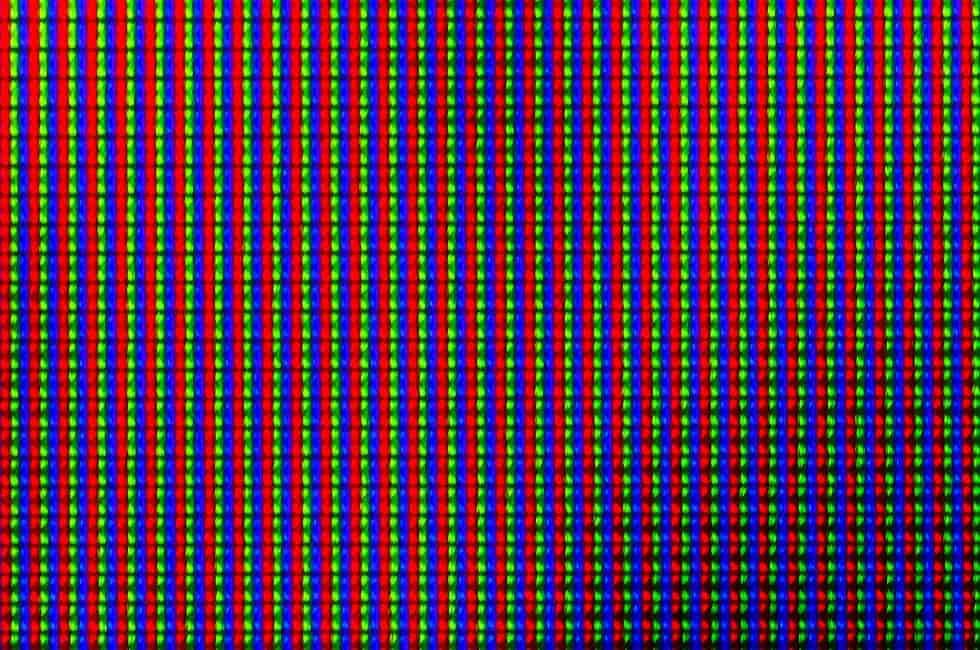

Most LCD panels currently on the market use a method called TFT LCD. TFT LCD panels adopt an active-matrix system in which an active element made of a thin-film transistor (TFT) is placed at each pixel.

Each pixel consists of three subpixels—red, green, and blue (RGB, the three primary colors of light). The TFT assigned to each subpixel controls voltage precisely, allowing accurate color reproduction through combinations of RGB.

Countless TFTs function as voltage switches, changing the transmittance of the liquid crystal layer corresponding to each microscopic pixel, which enables the display to produce a wide range of colors.
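To put a number on "a wide range of colors", the following minimal sketch counts the distinct colors available when each of the three subpixels can be driven to a fixed number of levels. The 8-bit and 10-bit depths are assumptions for illustration (this article does not state a bit depth), and `displayable_colors` is a hypothetical helper name.

```python
def displayable_colors(bits_per_subpixel: int) -> int:
    """Count the colors one RGB pixel can show at a given subpixel bit depth."""
    if bits_per_subpixel < 1:
        raise ValueError("bits_per_subpixel must be at least 1")
    levels = 2 ** bits_per_subpixel  # drive levels per subpixel
    return levels ** 3               # independent R, G and B subpixels


# Assumed example depths; real panels vary.
for bits in (8, 10):
    print(f"{bits}-bit subpixels -> {displayable_colors(bits):,} colors")
```

With 8 bits per subpixel this gives the familiar figure of roughly 16.7 million colors.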

Three Types of TFT LCD Panels: TN, VA, and IPS

TFT LCD panels can be broadly classified into three types depending on how liquid crystal molecules are controlled: TN, VA, and IPS.

Details of each type are explained later, but their general characteristics are summarized below.

- TN (Twisted Nematic): When voltage is off, liquid crystal molecules are twisted in layers. Light quantity is controlled by vertical and horizontal twisting.

- VA (Vertical Alignment): When voltage is off, liquid crystal molecules align vertically to the glass. Light quantity is controlled by vertical tilting.

- IPS (In-Plane Switching): When voltage is off, liquid crystal molecules align horizontally to the glass. Light quantity is controlled by horizontal rotation.

There are also IPS-derived technologies in practical use:

- AH-IPS (Advanced High Performance IPS): Developed by LG Display. Achieves high transmittance, high contrast, and low power consumption.

- AHVA (Advanced Hyper-Viewing Angle): Developed by AU Optronics. Features fast response speed.

- ADS (Advanced Super Dimension Switch): Developed by BOE. Features wide viewing angles of 178° vertically and horizontally, with minimal image change depending on viewing position.

Characteristics, Advantages, and Disadvantages of TN Panels

The TN method was invented around 1970 and remained the dominant driving method until the 1990s. It is a “normally white” TFT LCD panel, appearing white when voltage is off and black when voltage is on. Below, we explain how TN panels work and their advantages and disadvantages.

How TN Panels Work

TN panels control the twisted state of the liquid crystal layer by adjusting voltage strength, thereby regulating the intensity of light transmitted from the backlight. The liquid crystal layer is sandwiched between two polarizing filters oriented 90 degrees apart.

When voltage is off, liquid crystal molecules align with each polarizer near the surface and twist gradually within the layer. Because the polarization of the backlight rotates along the twist, light passes through when voltage is off.

When voltage is applied, the liquid crystal molecules stand up vertically, breaking the twisted structure. At maximum voltage, polarization no longer rotates and the backlight is blocked.
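The normally white behavior described above can be sketched with a deliberately simplified model. It treats the liquid crystal layer as an ideal polarization rotator and applies Malus's law (transmitted fraction = cos² of the angle between the light's polarization and the filter axis) at the exit polarizer. The linear link between drive level and remaining rotation is an assumption made only for illustration; real panels have a nonlinear voltage-transmittance curve. `tn_transmittance` is a hypothetical name.

```python
import math


def tn_transmittance(drive: float) -> float:
    """Toy model of a normally white TN cell.

    drive: normalized drive level, 0.0 (voltage off) to 1.0 (maximum voltage).
    Assumes the polarization rotation falls linearly from 90 to 0 degrees
    as drive increases, which is a simplification.
    """
    if not 0.0 <= drive <= 1.0:
        raise ValueError("drive must be between 0.0 and 1.0")
    rotation_deg = 90.0 * (1.0 - drive)
    # The exit polarizer is oriented 90 degrees from the entry polarizer,
    # so the mismatch is whatever rotation the layer failed to provide.
    mismatch_deg = 90.0 - rotation_deg
    return math.cos(math.radians(mismatch_deg)) ** 2  # Malus's law


for drive in (0.0, 0.5, 1.0):
    print(f"drive={drive:.1f} -> transmitted fraction {tn_transmittance(drive):.2f}")
```

In this model the cell passes all of the polarized light with voltage off and none at maximum drive, matching the white-to-black behavior described for TN panels.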

Advantages of TN Panels

TN panels have the following advantages:

- Simple structure and low cost

- High power efficiency, enabling high brightness with low power consumption

- Extremely fast fall response (white to black)

- Supports refresh rates up to 240 Hz

In short, the main strength of TN panels is that high-refresh-rate LCD panels can be obtained at low cost.
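As a rough illustration of why fast response matters at high refresh rates, the sketch below converts a refresh rate into the time available for each frame. A pixel transition that takes longer than this cannot finish before the next frame arrives. The 60 Hz and 144 Hz values are added for comparison and are assumptions, not figures from this article; `frame_time_ms` is a hypothetical helper.

```python
def frame_time_ms(refresh_rate_hz: float) -> float:
    """Time available per frame, in milliseconds, at a given refresh rate."""
    if refresh_rate_hz <= 0:
        raise ValueError("refresh_rate_hz must be positive")
    return 1000.0 / refresh_rate_hz


for hz in (60, 144, 240):
    print(f"{hz} Hz -> {frame_time_ms(hz):.2f} ms per frame")
```

At 240 Hz each frame lasts only about 4.17 ms, which is why the fast transitions of TN panels pair well with high refresh rates.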

Disadvantages of TN Panels

The major disadvantage of TN panels is significant color and brightness changes depending on viewing angle.

Because transmittance is controlled by twisting liquid crystal molecules, viewing the screen from an angle can cause noticeable differences compared to viewing from the front.

In addition, when horizontally twisted liquid crystal molecules are raised vertically, their alignment becomes uneven.

As a result, complete light blocking is not possible, causing the screen to appear whitish and making it difficult to achieve a high contrast ratio.

Characteristics, Advantages, and Disadvantages of VA Panels

VA panels are “normally black” TFT LCD panels, appearing black when voltage is off and white when voltage is on. Unlike TN panels, which adjust twisting from horizontal to vertical, VA panels adjust tilt from vertical to horizontal. Due to this difference, VA panels excel at black representation.

How VA Panels Work

VA panels apply voltage to tilt liquid crystal molecules from a vertical to a horizontal position, controlling light transmittance by adjusting the tilt angle. When voltage is off, liquid crystal molecules align vertically to the glass surface and almost completely block the backlight. When voltage is applied, the molecules tilt horizontally, and at maximum voltage they become fully horizontal, allowing the most light to pass through.

Advantages of VA Panels

VA panels offer the following advantages:

- Extremely high light-blocking performance, enabling deep blacks

- Very high contrast ratio

- Minimal screen whitening even in dark environments

In short, the key strength of VA panels is their ability to display very pure blacks.

Disadvantages of VA Panels

Compared to other driving methods, VA panels have slower response times. They are not well suited for smoothly displaying fast-moving images. Additionally, because light quantity is controlled by vertical tilt, brightness changes depending on viewing angle. While not as severe as TN panels, color and brightness shifts with viewing angle remain a drawback. Some products mitigate this by controlling tilt direction by area, achieving wider viewing angles.

Characteristics, Advantages, and Disadvantages of IPS Panels

Like VA panels, IPS panels are “normally black” TFT LCD panels. Unlike TN and VA panels, IPS panels do not tilt liquid crystal molecules vertically. Overall performance is high, and their greatest advantage is extremely wide viewing angles.

How IPS Panels Work

IPS panels apply voltage to rotate liquid crystal molecules horizontally, controlling light transmittance through rotational adjustment. When voltage is off, liquid crystal molecules align horizontally to the glass and are oriented 90 degrees to the polarizer slits, blocking the backlight.

When voltage is applied, the molecules rotate horizontally, and at maximum voltage they align with the slits, allowing the most light to pass through.

Advantages of IPS Panels

IPS panels have the following advantages:

- Many products offer wide viewing angles of 178° vertically and horizontally

- Minimal color and brightness changes with viewing angle

- Balanced overall performance

Because IPS panels do not tilt molecules vertically, their greatest strength is their extremely wide viewing angles.

Even when viewed from extreme angles, color reproduction remains stable, making IPS panels useful in situations where TN and VA panels struggle.

Disadvantages of IPS Panels

IPS panels have no major weaknesses, but compared to the strengths of TN and VA panels, the following points can be considered disadvantages:

- Slightly slower response times, making them less suited to fast-moving images

- Light leakage even when blocking light, making high contrast harder to achieve

- Higher manufacturing cost, resulting in higher prices

How to Choose a TFT LCD Panel

TN, VA, and IPS panels each have different strengths, and the optimal driving method depends on usage.

TN panels excel in refresh rate and low cost.

VA panels excel in black representation.

IPS panels offer superior viewing angles and overall balance.

Key Points for Comparison

When comparing LCD panels, consider the following five factors:

- Viewing angle: The range of angles, horizontally and vertically, from which the screen can be viewed correctly.

- Color shift: Inaccurate color reproduction, most noticeable when the screen is viewed at an angle.

- Transmittance: The proportion of light that passes through the display.

- Contrast ratio: The ratio between the brightest white and darkest black.

- Response time: The time required for color transitions such as black → white → black.
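Of the factors above, contrast ratio is the easiest to express as a formula: the luminance of full white divided by the luminance of full black. The sketch below shows the arithmetic. The luminance values are made-up illustrative numbers, not measurements of any panel, and `contrast_ratio` is a hypothetical helper.

```python
def contrast_ratio(white_cd_m2: float, black_cd_m2: float) -> float:
    """Contrast ratio = luminance of full white / luminance of full black."""
    if black_cd_m2 <= 0:
        raise ValueError("black luminance must be greater than zero")
    return white_cd_m2 / black_cd_m2


# Hypothetical luminance pairs (white, black) in cd/m², for illustration only.
examples = {
    "panel with more light leakage": (250.0, 0.25),
    "panel with deeper blacks": (250.0, 0.0625),
}
for label, (white, black) in examples.items():
    print(f"{label}: {contrast_ratio(white, black):.0f}:1")
```

The two example panels have the same white level, so the difference in ratio comes entirely from how much light leaks through in the black state.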

Strengths and Weaknesses Comparison

| Type | Strengths | Weaknesses |
| --- | --- | --- |
| TN | Fast response, high transmittance | Narrow viewing angles, color shift |
| VA | Very high contrast ratio | Slower response speed |
| IPS | Extremely wide viewing angles, minimal color shift | Higher cost |

Suitable Uses by Panel Type

Due to performance differences, each panel type has suitable and unsuitable applications.

- TN: Single-user gaming monitors and sports viewing

- VA: High-quality gaming and movie viewing

- IPS: Design work, general use, and digital signage
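The selection logic in this article can be written down as a small lookup, shown here as a minimal sketch. The strengths assigned to each type are taken from the comparison in this article, while the keyword labels (`"response"`, `"contrast"`, and so on) and the function `suggest_panels` are hypothetical names chosen for this example. It is a starting point for shortlisting, not a substitute for checking the specifications of individual products.

```python
# Strengths per panel type, as summarized in this article.
# The keyword labels are hypothetical names used only in this sketch.
PANEL_STRENGTHS = {
    "TN": {"response", "transmittance", "cost"},
    "VA": {"contrast"},
    "IPS": {"viewing_angle", "color_stability"},
}


def suggest_panels(priorities):
    """Return panel types matching at least one priority, best match first."""
    wanted = set(priorities)
    scores = {
        name: len(strengths & wanted)
        for name, strengths in PANEL_STRENGTHS.items()
    }
    matches = [name for name, score in scores.items() if score > 0]
    # sorted() is stable, so ties keep the TN, VA, IPS order defined above.
    return sorted(matches, key=lambda name: scores[name], reverse=True)


print(suggest_panels(["contrast"]))
print(suggest_panels(["response", "cost"]))
print(suggest_panels(["viewing_angle", "color_stability", "contrast"]))
```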

Consider Brightness and Durability for Digital Signage

When using LCD panels for digital signage, continuous operation performance is required. For outdoor use, the following points are important:

- Brightness of at least 700 cd/m²

- High durability and weather resistance

- Heat-resistant design using cooling systems

Summary

TFT LCD panels are available in three types: TN, VA, and IPS. TN panels are suitable for single-user gaming, VA panels are ideal for movies, and IPS panels offer high overall performance and wide viewing angles.

When using LCD panels for digital signage, brightness, durability, and heat resistance are also important considerations.