If you’ve spent any time looking at gaming monitors over the last few years, it’s almost impossible not to run into labels like “G-Sync Compatible” or “FreeSync Premium” printed right on the box or pushed to the top of a product page. I used to treat those names as background noise, something that sounded technical but didn’t really change how a monitor felt once it was on my desk. That view changed gradually, after moving between different displays, GPUs, and even consoles, where the gap between G-Sync and FreeSync stopped being theoretical and started affecting how games actually looked and behaved during long sessions.

Both systems are meant to deal with tearing and uneven motion, but they don’t reach that point in the same way, and over time those differences begin to show up in small, everyday moments rather than in spec sheets. This piece isn’t about declaring one winner, but about how these technologies settled into my own setup and habits, and what that meant across different games and hardware.

What Is Adaptive Sync — and Why It Matters

Before putting FreeSync and G-Sync side by side, it helps to step back and think about why adaptive sync entered my daily gaming life in the first place.

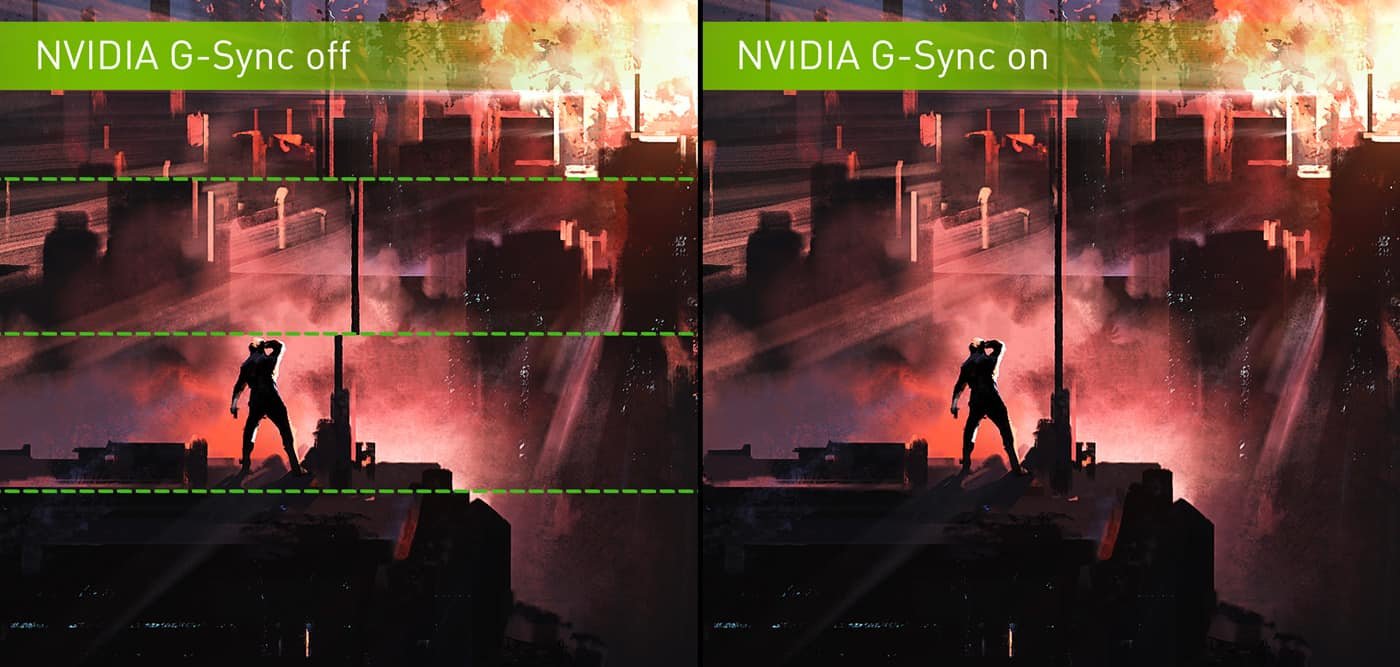

I noticed early on that some games felt fine one evening and strangely uneven the next, even when the settings hadn’t changed. Frame rates drifted, scenes tore slightly when turning quickly, and there was a sense that the image and the system were no longer moving together. Adaptive Sync stepped in by letting the screen follow the graphics card instead of running on its own fixed rhythm, which quietly changed how motion felt without drawing attention to itself.

Once I got used to that behavior, going back to a monitor without it felt distracting in ways that were hard to explain unless you’d lived with both for a while. That adjustment period is what made Adaptive Sync stop feeling like a checkbox and start feeling like a baseline expectation.
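The idea of the screen following the graphics card is easier to see with numbers. What follows is only a rough sketch under my own assumptions, not how any driver or monitor actually schedules frames: the frame timings are made up, the fixed display is assumed to run at 60 Hz with V-Sync on, and the variable one is assumed to top out at 144 Hz.

```python
import math


def fixed_refresh_times(frame_ready_ms, refresh_hz=60.0):
    """Fixed refresh with V-Sync: each frame waits for the next refresh tick."""
    interval = 1000.0 / refresh_hz
    return [math.ceil(t / interval) * interval for t in frame_ready_ms]


def variable_refresh_times(frame_ready_ms, max_hz=144.0):
    """Variable refresh: the panel refreshes when a frame is ready,
    but never faster than its maximum refresh rate allows."""
    min_gap = 1000.0 / max_hz
    shown, last = [], -math.inf
    for t in frame_ready_ms:
        last = max(t, last + min_gap)
        shown.append(last)
    return shown


def gaps(times):
    """Time between consecutive displayed frames, rounded for readability."""
    return [round(b - a, 2) for a, b in zip(times, times[1:])]


# Hypothetical moments (in ms) at which the GPU finishes each frame.
ready = [18, 40, 59, 84, 101]

print(gaps(fixed_refresh_times(ready)))     # gaps snap to multiples of the 60 Hz interval
print(gaps(variable_refresh_times(ready)))  # gaps follow the GPU's own pacing
```

On the fixed display, one frame that arrives slightly late is held for a whole extra refresh, which is the kind of hitch I used to notice without being able to name it. On the variable display, the on-screen gaps simply match the render times.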

The names most people run into here are still the same two:

- AMD FreeSync

- NVIDIA G-Sync

What Is G-Sync?

G-Sync entered my setup during a period when I was already deep into NVIDIA hardware, and it settled in without much fanfare. The idea of a dedicated module inside the monitor sounded excessive at first, but over longer use it became clear why NVIDIA insisted on that approach.

What stood out wasn’t a dramatic change, but consistency. Whether a game hovered around 50 frames or climbed past 100, motion stayed calm, without sudden jumps or visual breaks that pulled attention away from the screen. Fast camera movement felt steadier, and longer sessions didn’t leave me with the sense that something was subtly off.

What stayed with me about G-Sync:

- Screen tearing stopped being something I thought about

- Frame pacing felt predictable across different games

- The pairing with NVIDIA GPUs felt natural

- Higher-end versions leaned into HDR and high refresh panels

“After a while, the absence of tearing mattered more than the presence of any headline feature.”

That approach came with a trade-off. Displays built around G-Sync usually sat higher on the price ladder, and the requirement for DisplayPort meant fewer options if I wanted flexibility. It worked best when everything in the chain stayed within NVIDIA’s ecosystem.

What Is FreeSync?

AMD FreeSync entered my routine from the opposite direction, not as a statement piece but as something quietly built into more monitors than I expected. It leaned on existing standards rather than added hardware, and that difference showed up immediately in availability.

Moving between several FreeSync displays over time, I noticed how varied the results could feel. Some panels handled motion with ease, while others needed a bit of tweaking before everything settled down. The upside was choice. There were far more sizes, refresh rates, and price points to work with, and that flexibility mattered when upgrading or repurposing a setup.

What FreeSync brought into daily use:

- Access to a broad range of monitors

- Lower entry cost without abandoning variable refresh

- Compatibility that extended beyond just AMD cards

- Higher tiers that addressed low frame rate behavior and HDR handling

The tier system mattered more than I expected. Basic FreeSync felt different from Premium, and once LFC (Low Framerate Compensation) entered the picture, low frame dips became less distracting during heavier scenes.
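As I understand it, LFC works by showing each frame more than once when the frame rate falls below the panel's minimum refresh rate, so the display stays inside its variable refresh window. Here is a minimal sketch of that arithmetic. The function name and the 48 to 144 Hz window are my own illustrative assumptions, and real drivers are certainly more sophisticated than this.

```python
def lfc_refresh(fps, vrr_min=48.0, vrr_max=144.0):
    """Find the smallest whole-number frame repeat that lifts the refresh
    rate into the panel's variable refresh window.

    Returns (multiplier, refresh_hz), or None when no whole multiple of
    the frame rate lands inside the window.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    multiplier = 1
    while fps * multiplier < vrr_min:
        multiplier += 1
    refresh_hz = fps * multiplier
    if refresh_hz > vrr_max:
        return None
    return multiplier, refresh_hz


# Hypothetical frame rates on an assumed 48-144 Hz panel.
for fps in (60, 30, 20):
    print(fps, lfc_refresh(fps))

# An assumed narrow 48-75 Hz window: doubling 40 fps overshoots the maximum.
print(40, lfc_refresh(40, vrr_min=48.0, vrr_max=75.0))
```

The last case hints at why the wider panels handled dips better for me: frame doubling only has room to work when the maximum refresh rate is roughly twice the minimum or more.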

AMD FreeSync vs NVIDIA G-Sync: Core Differences

| Aspect | FreeSync | G-Sync |
|---|---|---|
| Dedicated module | No | Yes |
| Typical pricing | Lower entry points | Higher cost |
| GPU pairing | AMD and many NVIDIA cards | NVIDIA-focused |
| HDR handling | Premium Pro tier | Ultimate tier |
| Input lag feel | Consistently low | Very tightly controlled |
| Panel variance | Depends heavily on the model | More uniform |
| HDMI support | Common | Limited |

Placed side by side, the difference settled into a question of control versus flexibility. G-Sync stayed predictable across situations, while FreeSync depended more on the specific screen in front of me.

FreeSync Premium vs G-Sync: HDR, LFC, and Higher Tiers

As displays moved forward, the naming became more layered, and I found myself paying less attention to the badge and more to how the screen behaved late at night or during longer play sessions.

FreeSync Premium and Premium Pro addressed issues that only appeared once frame rates dipped or HDR entered the mix, while G-Sync Ultimate leaned into brighter panels and higher resolutions. What mattered most, though, was the panel itself. Two monitors with similar labels could feel entirely different once they were actually used day after day.

Is G-Sync the Same as G-Sync Compatible?

They share a name, but they don’t behave the same way. Native G-Sync displays carry NVIDIA’s module and felt tightly managed, while G-Sync Compatible monitors are Adaptive Sync panels that NVIDIA has validated, with no dedicated hardware inside.

In use, the difference wasn’t dramatic, but it existed. Around the edges of refresh ranges or during sudden drops, native G-Sync felt calmer, while Compatible models sometimes needed a bit more adjustment. Still, for most setups, the trade-off felt reasonable.

FreeSync vs G-Sync vs Adaptive Sync

Over time, the naming stopped feeling confusing and started making sense:

- Adaptive Sync as the shared foundation

- FreeSync as AMD’s take on that standard

- G-Sync Compatible as NVIDIA’s approval layer

- G-Sync as a hardware-driven approach

They all aim to keep the screen and GPU moving together, but the path they take shapes how flexible the setup feels.

Console Support and Cross-Platform Compatibility

Once consoles entered the setup alongside a PC, flexibility started to matter more. Xbox Series X and S worked comfortably with both FreeSync and HDMI VRR, while PlayStation 5 supported only HDMI Forum VRR.

Native G-Sync displays stayed focused on PC use, which made FreeSync-based monitors easier to live with when switching between systems.

Final Verdict: G-Sync vs FreeSync

After living with both, the choice stopped being about labels and started being about how a monitor fit into my day. Native G-Sync felt controlled and predictable when paired with NVIDIA hardware. FreeSync felt adaptable, easier to match with different systems, and less restrictive when upgrading or repurposing gear.

With current GPUs blurring the lines between ecosystems, the badge matters less than the screen behind it. Panel behavior, comfort over long sessions, and how the display reacts under changing frame rates ended up shaping my preference far more than brand alignment.